The Foundations of Video Artificial Intelligence

Share on Social

Artificial Intelligence (AI) refers to a broad set of approaches for allowing computers to mimic human abilities. This is distinct from automation, which is the process of creating hardware or software capable of conducting tasks without human intervention. AI is likely required to automate complex jobs, but they are not the synonyms some marketers try to make them. As Rev begins to introduce some exciting new AI features this fall, it is important to understand the fundamentals of how AI works first. The next post will focus on AI powered video in the real world.

Fundamental Disciplines of Modern Artificial Intelligence

The most common method being used today is Machine Learning, where massive amounts of data are “fed” into an algorithm in order to train it. Once trained, the algorithm will be able to identify and then categorize items in subsequent data feeds unassisted. Machine Learning algorithms use an iterative process, so as the learning models get exposed to new data, they adapt from what they have “learned.”

A key shortcoming of machine learning, which we will continue to reference across more advanced skills, is the reliance on vast amounts of sample data in order to become accurate enough to use. Thus, current applications of Machine Learning are limited depending on sources of high quality input data. If you are interested in learning more about Machine Learning, SAS has an in-depth write up of it here.

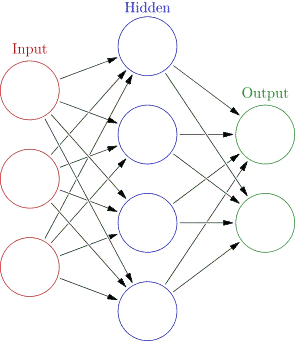

Deep learning is actually a subtype of machine learning, allowing an AI to predict outputs given a set of inputs. A key significance here is that Deep Learning will leverage Neural Networks algorithms, which are inspired by the functions and structure of organic brains. Deep Learning is popular for applications where vast amounts of unstructured data needs to be analyzed, where human-driven analysis would be impractical.

Another key discipline, and one that we will focus the most attention to, is Computer Vision. In Computer Vision, the goal is to interpret the visual elements of an image or video using Artificial Intelligence. Computer Vision may use either Machine Learning or Deep Learning techniques to accomplish this goal, and is the foundation of emerging technology applications such as Facial Recognition and automated vehicles. Teaching computers to process visual data just as a human would has proven much harder than simply connecting algorithms to cameras.

Another key discipline, and one that we will focus the most attention to, is Computer Vision. In Computer Vision, the goal is to interpret the visual elements of an image or video using Artificial Intelligence. Computer Vision may use either Machine Learning or Deep Learning techniques to accomplish this goal, and is the foundation of emerging technology applications such as Facial Recognition and automated vehicles. Teaching computers to process visual data just as a human would has proven much harder than simply connecting algorithms to cameras.

Much of our challenge is rooted in an only basic understanding of how human vision actually works in order to replicate it. Despite this, Computer Vision is currently one of the most exciting facets of AI for business strategists, with 58% of purchase influencers beginning to plan computer vision investments in their enterprise technology portfolio within the next year according to Forrester.

Video AI Building Blocks: Automating Voice and Text Applications

Spoken words are a critical component of video and there are a number of ways that AI is helping interpret speech. Machine Transcription is an example of one of the earliest examples of Artificial Intelligence, where an algorithm is able to interpret voice data into a text transcript. This technology is now commonplace and even cooked into our smartphones, but is also undergoing a renaissance thanks to innovative new deep learning techniques becoming available.

Machine Transcription

Machine Transcription enables users to search through the dialogue of the video and make it more widely accessible via Closed Captions. This technology has been around for broadcast television, voice mail services, and live events for many years, but deep learning is now helping to improve the accuracy and speed of the transcription. Machine transcription is sometimes called Automatic Speech Recognition (ASR), you can read more about its long history here.

Machine Translation

Once spoken words are digested into text data, it unlocks other abilities like translation into additional languages. This is a daunting task because there are many combinations within the original language and gray areas of translating into target language. This problem has plagued humans for eons, which is why we have the cultural idiom “lost in translation,” which is when the full meaning of a message has been lost after translating it to a new language.

One of the key AI pioneers in this field has been Google, who first launched their translation service in 2006, using United Nations & European Parliament transcripts as the foundation linguistic data. As of May 2017, Google supported over 100 languages and was serving 500 million people daily. In late 2016, Google has long demonstrated strength in automated translation of webpages, but has now developed AI’s capable of translating spoken language in real-time while replicating the voice of the user.

Speaker Recognition

Another AI technology that is coming to maturity is voice-based Speaker Recognition. This is the ability of an AI to recognize the identity of a speaker based off their voice and speech patterns. A key dependency of this ability is an existing sample of the person’s voice to train the AI on.

Currently this is being applied with voice assistants, when using voice commands to unlock a device, activate a service, or receive personalized question responses based off asker identity. In the video world, combining Speaker Recognition with Machine Transcription can show us what a subject said at any point during a long recording.

Optical Character Recognition (OCR)

OCR is the art of recognizing text from within visual content, such as the text on embedded presentation slides. The primary benefit of OCR in the business world is further enabling search engines to offer up visual content to users without over dependence on accurate and comprehensive metadata.

This enables the rediscovery of important small details, which may have not been major enough to highlight in the video overview, such as a single statistic or chart buried within a long business presentation. Another use case is translating embedded text within an image.

Sentiment Analysis

Another way to enrich text data is via an additional layer of information called sentiment. This algorithm interprets dialogue to both identify and quantify affective states. Affective states are distinct from emotions, as affective states are longer lasting mood states (such as anxiety or depression) which are the results of many events.

Affective states are easier to detect than emotions as they are generally expressed in drawn-out patterns, while emotions are often only expressed subtly (sometimes only expressed visually). Affective states are what scientists will measure when their subjects are not capable of expressing (or even potentially feeling) human emotions. It is logical that this would be a key skill for AIs to master before they could gain accuracy analyzing much more complex and nuanced emotional states (which often confuse human observers themselves).

Text Summarization

One of the newer text applications that will help build the next generation of video Artificial Intelligence is content summarization. This is when an algorithm is able to boil down hours of video into a concise text summary. Summarization algorithms will take into account the placement or emphasis of messages within a video.

Newer approaches interpret the meaning of each sentence and find common themes to highlight across all of the sentences. From a business perspective, algorithms will soon be able to extract key information such as action items from a project team meeting. Another application we can expect to see is much improved automated reports, with generated insights based off contextual data.

This gives us an overview of the key concepts shaping Video AI for businesses. In our next post you can expect a much deeper dive into how computer vision is rapidly evolving along these concepts and promising a business world prolific with high quality video content. If you would like to see how Vbrick is implementing Video AI features into our product roadmap, be sure to register for our webinar on Video AI on September 19.